Docker Explained: Finally Understand Containers Without Losing Your Mind (Probably)

Your code deserves better than deployment chaos. Let's break down Docker, images, layers, and containers using logic, sarcasm, and the miracle of a well-packed lunch.

(Estimated Read Time: 7 minutes)

(Keywords: Docker, Containers, Dockerfile, Docker Image, Layers, Docker Build, Docker Run, Containerization, DevOps, Software Development, Explanation)

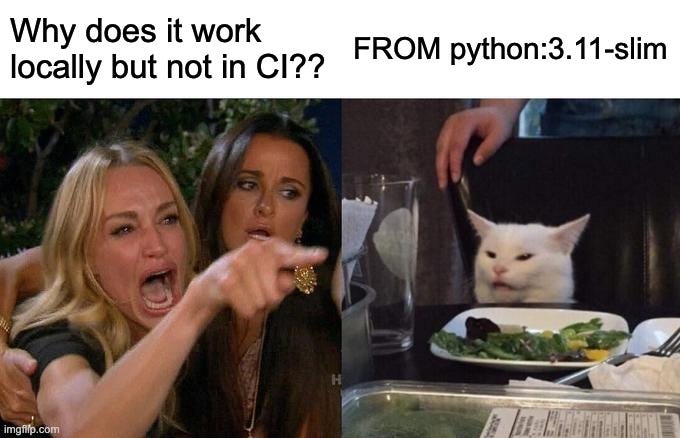

Let's be real. If you've ever coded anything more complex than a blinking cursor, you've likely uttered the cursed phrase: "But... it works on my machine!" That beautiful, soul-crushing moment when your masterpiece runs perfectly on your laptop, but deploy it anywhere else? It explodes spectacularly, often in ways that defy logic and induce existential dread.

It's like crafting a gourmet meal in your pristine kitchen, using your perfectly calibrated oven and your favorite artisanal sea salt. Then, you try to recreate it at a friend's place using their questionable microwave, a single blunt knife, and spices of unknown origin and vintage. It’s a recipe for disappointment, possibly food poisoning.

For years, deploying software felt a lot like that: a frustrating gamble plagued by missing dependencies, conflicting versions, and environments that were about as consistent as weather forecasts. Then came Containers, and the tool that brought them to the masses: Docker. They promised a way out of this dependency hell.

The Special Hell of "Works On My Machine": The Actual Problem

Before containers, installing an application felt like performing a dark ritual. You had to install the exact right version of the database, the specific library for image processing that was blessed by the full moon, the precise web server configuration... all while praying they wouldn't clash with each other or with the dozen other things already running on the server.

Every machine was its own unique snowflake of chaos (an "environment"). Your laptop, the test server, the production server – all subtly (or wildly) different. And your poor application was expected to just deal with it. The result? Phantom bugs, wasted days configuring things, and a strong urge to take up goat farming.

The Magic Fix: The Perfect Damn Lunchbox (Containers Explained)

Imagine instead of trying to cook in that disaster zone of a kitchen, you meticulously prepare your perfect meal at home and pack it in an impeccably organized, possibly slightly obsessive, meal-prep container. That, in essence, is a Container.

A Container is a standardized, isolated package that holds:

Your Code: The actual application, the gourmet meal you slaved over.

All Its Dependencies: The exact tiny organic chia seeds, the specific gluten-free croutons, the precise version of that obscure Python library it inexplicably needs. Everything that application requires, and nothing else.

Runtime Instructions: How to "heat it up" or run the application correctly.

Why is this lunchbox approach so great?

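Isolation: What's inside the box stays inside the box. Your meticulously prepared quinoa salad doesn't start smelling like the leftover fish curry someone else brought. A container gets its own filesystem, processes, and network view, separate from other containers and from everything else on the host (it does share the host's kernel, which is part of why it stays so light). Goodbye, conflicts!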

Consistency: The meal tastes the same whether you eat it sadly at your desk or triumphantly in the park. A container runs exactly the same way regardless of where you run it – your laptop, a test server, the cloud. Goodbye, "Works on my machine!"

Portability: Easy to grab your lunchbox and go. Easy to move a container image between environments. Lightweight and hassle-free (mostly).

Who Packs This Thing? Enter Docker

Okay, the perfect lunchbox/container concept is neat. But who actually makes it? That's where Docker comes in.

Docker is NOT the same as a Container. Docker is the main tool (think the "Kleenex" or "Tupperware" brand name of the container world) that lets you build, share, and run these containers. Think of it as the overly-organized meal-prep guru in your life:

1. The Hyper-Specific Recipe (Dockerfile)

First, Docker needs instructions. You don't just hand it ingredients; you give it a Dockerfile. This plain text file is your neurotic, step-by-step recipe detailing exactly how the lunchbox must be packed.

Take this Dockerfile for example:

    FROM python:3.11-slim
    WORKDIR /app
    COPY requirements.txt .
    RUN pip install -r requirements.txt
    COPY . .
    CMD ["python", "main.py"]

FROM python:3.11-slim: "Start with the 'Python 3.11' model empty lunchbox."

WORKDIR /app: "Designate the main compartment as '/app'."

COPY requirements.txt .: "Put the list of required spices inside."

RUN pip install -r requirements.txt: "Install those exact spices into the compartment using the 'pip' spice grinder."

COPY . .: "Now, put my main dish code inside."

CMD ["python", "main.py"]: "Label it: 'Run the main dish program when ready to eat'."
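The recipe points at a main.py that never actually shows up here. As a rough stand-in (entirely hypothetical, including the greeting function), this is about the smallest main.py that would give the CMD line something to run, assuming it needs nothing beyond the standard library:

```python
# main.py: hypothetical stand-in for the app that the Dockerfile's CMD launches.
import platform
import sys


def greeting() -> str:
    """Describe the environment this script is running in."""
    version = platform.python_version()
    return f"Hello from Python {version} on {sys.platform}"


if __name__ == "__main__":
    # This is what `python main.py` executes when the container starts.
    print(greeting())
```

Run inside the container, this would report the Python that ships with the base image rather than whatever happens to be installed on your laptop, which is the whole point of the lunchbox.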

2. The Chef & The Magic of Layers (docker build)

With the recipe (Dockerfile) ready, you tell Docker "Build!" (docker build). This kicks off the assembly line. But Docker is smart (or efficiently lazy). It builds the lunchbox blueprint – called an Image – using stackable, reusable Layers:

Base Layer: The initial FROM instruction grabs the base lunchbox model (e.g., Ubuntu OS). This layer rarely changes.

Intermediate Layers: Each subsequent instruction (RUN, COPY, etc.) usually adds a new transparent tray on top. Installing tools? A layer with utensils. Installing dependencies? The layer with the pre-washed lettuce and specific dressing packet.

Top Layer: Copying your application code often forms one of the final layers – the actual main dish placed on top.


The Genius Part: Layer Caching. When you build again, Docker checks your recipe. If an instruction and the files it copies in haven't changed (you used the same lettuce), Docker reuses the cached layer from the last time! It only rebuilds starting from the first changed instruction, and everything after that point is rebuilt too. Changed only your app code (top layer)? The build reuses all the heavy base layers and finishes in seconds, not minutes. It's like the chef grabbing the pre-made salad base and just adding your new garnish. This keeps the edit-build-test loop short.
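To make the "first changed instruction" rule concrete, here is a toy model in Python. It is a sketch of the idea, not Docker's actual cache: layer_keys, plan_build, and recipe are made-up names, and the second item in each step (like "reqs-v1") is a stand-in for a fingerprint of the files that step copies in.

```python
import hashlib


def layer_keys(steps):
    """Return one cache key per step; each key also depends on every earlier step."""
    keys, parent = [], ""
    for instruction, content in steps:
        raw = f"{parent}|{instruction}|{content}".encode()
        parent = hashlib.sha256(raw).hexdigest()
        keys.append(parent)
    return keys


def plan_build(steps, cache):
    """Mark each step 'cached' if its key was seen in an earlier build, else 'rebuilt'."""
    return [
        (instruction, "cached" if key in cache else "rebuilt")
        for (instruction, _), key in zip(steps, layer_keys(steps))
    ]


def recipe(reqs, code):
    """The article's Dockerfile as (instruction, copied-file fingerprint) pairs."""
    return [
        ("FROM python:3.11-slim", ""),
        ("WORKDIR /app", ""),
        ("COPY requirements.txt .", reqs),
        ("RUN pip install -r requirements.txt", ""),
        ("COPY . .", code),
        ('CMD ["python", "main.py"]', ""),
    ]


# The first build fills the cache; the second build changes only the app code.
cache = set(layer_keys(recipe("reqs-v1", "code-v1")))
for instruction, status in plan_build(recipe("reqs-v1", "code-v2"), cache):
    print(f"{status:8} {instruction}")
```

In this model the first four steps come back as cached and only COPY . . and CMD are redone. Change the requirements fingerprint instead and everything from COPY requirements.txt . onward is rebuilt, which is why the recipe copies requirements.txt before the rest of the code.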

The final output of docker build is the Docker Image: the sealed, read-only, layered blueprint of your perfect lunchbox.

3. The Logistics Expert (docker push/pull)

Docker lets you store these Images in Registries (like Docker Hub). Think of this as the giant, organized online freezer full of perfectly labeled meal boxes (Images). You can push your custom-made image there to share it, or pull images made by others. This is how you distribute your application's blueprint.
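The "label" on each box is the image name, and Docker fills in the parts you leave off. The sketch below is a simplified, hypothetical helper (parse_image_ref is not part of any Docker library, and it ignores digests such as @sha256:...) that illustrates the usual defaults: Docker Hub as the registry, the library namespace for official images, and latest as the tag. The acme/lunchbox and myuser/lunchbox names are invented for the example.

```python
def parse_image_ref(ref: str) -> dict:
    """Split an image name into registry, repository, and tag, filling in defaults."""
    first, slash, rest = ref.partition("/")
    # The first path component counts as a registry host only if it looks like one.
    if slash and ("." in first or ":" in first or first == "localhost"):
        registry, remainder = first, rest
    else:
        registry, remainder = "docker.io", ref

    # A tag is whatever follows the last colon, as long as no slash comes after it.
    name, colon, tag = remainder.rpartition(":")
    if not colon or "/" in tag:
        name, tag = remainder, "latest"

    # Official Docker Hub images live under the implicit "library" namespace.
    if registry == "docker.io" and "/" not in name:
        name = f"library/{name}"
    return {"registry": registry, "repository": name, "tag": tag}


for ref in ("python:3.11-slim", "myuser/lunchbox", "ghcr.io/acme/lunchbox:v2"):
    print(ref, "->", parse_image_ref(ref))
```

So pulling python:3.11-slim, as the recipe's FROM line does, is shorthand for a specific repository and tag on Docker Hub, while a name that starts with a host such as ghcr.io points push and pull at a different freezer entirely.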

4. The Universal Microwave (docker run & The Container)

An Image is just the blueprint (the frozen meal). To actually run your application (eat the lunch), you use docker run <your-image-name>. This command:

Takes the read-only Image layers (the frozen blueprint).

Adds a thin, writable layer on top (like a napkin for crumbs or temporary notes during the meal).

Starts your application process inside this new structure, following the CMD instruction from your recipe.

This live, running thing is the Container.

Image vs. Container:

Image: Read-only blueprint; the frozen meal box. Build once.

Container: Running instance based on an Image; the meal being eaten now. Can run many from the same Image. Each is isolated with its own writable layer for temporary changes.
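If the napkin-on-top idea feels abstract, Python's collections.ChainMap happens to behave a lot like it: lookups fall through a stack of mappings, but writes only ever land in the first one. The following is a toy analogy under that assumption, not how Docker's storage drivers work; run_container, the file paths, and the file contents are all made up for illustration.

```python
from collections import ChainMap
from types import MappingProxyType

# The Image: ordered, read-only layers, base layer first.
IMAGE_LAYERS = (
    MappingProxyType({"/usr/local/bin/python": "python 3.11"}),
    MappingProxyType({"/app/requirements.txt": "requests"}),
    MappingProxyType({"/app/main.py": "print('hi')"}),
)


def run_container(image_layers):
    """Return a container-like view: a fresh writable dict over the shared layers."""
    writable = {}
    # ChainMap searches left to right, so the newest layer must come first.
    return ChainMap(writable, *reversed(image_layers))


# Two containers started from the same image.
lunch_a = run_container(IMAGE_LAYERS)
lunch_b = run_container(IMAGE_LAYERS)

# Changes made in one container land only in its own writable layer.
lunch_a["/tmp/notes.txt"] = "crumbs"
lunch_a["/app/main.py"] = "print('patched')"

print(lunch_a["/app/main.py"])        # container A sees its own copy
print(lunch_b["/app/main.py"])        # container B still sees the image's file
print("/tmp/notes.txt" in lunch_b)    # A's scratch file never reaches B
```

Both containers share the same three read-only layers, and discarding lunch_a throws away its writable dict with it, which mirrors why changes made inside a container are temporary.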

Your application springs to life, snug and isolated in its box, running exactly as intended thanks to the meticulous packing job.

So, What's the Big Deal? (Real-World Benefits Revisited)

This whole elaborate lunchbox system gives you:

Faster Development & Deployment: Quicker builds (layers!), easy sharing (registries!), reliable execution (run).

True Consistency: The "works on my machine" excuse is finally dead (mostly). Hallelujah.

Better Resource Usage: Containers are lighter than full virtual machines. More apps per server = less money spent.

Improved Isolation & Security: Apps stay in their lanes, reducing conflicts and potential security issues.

Enables Modern Architectures: Microservices basically require containers to be manageable.

The catch? Managing hundreds of these lunchboxes efficiently requires an orchestrator (like Kubernetes), which builds on top of containers and the images Docker produces. But understanding this foundation is key.

Conclusion: The Lunchbox Revolution is Here to Stay

Containers aren't just a fad; they're how modern software gets built and run. Docker makes this powerful technology accessible. By packaging your code and dependencies into these consistent, isolated lunchboxes (Images) and running them reliably (Containers), you eliminate a massive source of developer pain and deployment nightmares.

Of course, once you've mastered packing and running a single lunchbox, new questions arise. How do you handle data that needs to stick around even if the container stops, like databases or user uploads? (That's where Docker Volumes come in). How do different containers talk to each other, or how do you access your running web application from your browser? (Look into Docker Networking and port mapping). And what if your application is a multi-course meal, requiring several services like a web frontend and a database to run together seamlessly, especially during development? (That's the job of Docker Compose). These concepts are the next layer to mastering the containerized world.

Understanding Dockerfiles, layers, images, and containers is essential tech knowledge now. So embrace the tupper. Your sanity (and your team) will thank you. What’s your worst ‘works on my machine’ moment?